CSE190: Advanced Computer Graphics

Assignment 1 Report: Image and Signal Processing

Date: Spring 2015

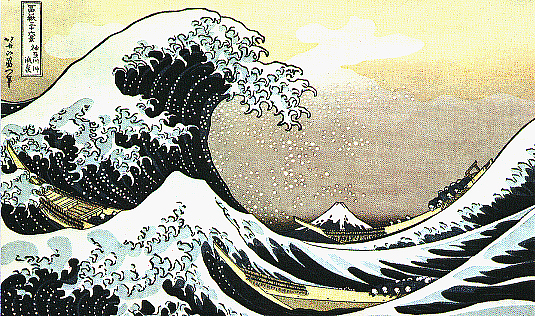

This assignment is the creation of a basic image processing program, similar to a small version of Photoshop or another photo editor.

Please note that all images on this website are converted to .jpg from the .bmp output of the program.

Basic Operations

Image::Brighten

command line arguments: ./image -brightness [factor] < in.bmp > out.bmp

Brightness is controlled by scaling each pixel value by the input factor. The factor must be non-negative. A factor of 1.0 preserves the image, 0.0 makes it black, values between 0.0 and 1.0 darken it, and values greater than 1.0 brighten it.
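The scaling described above can be sketched as follows. This is a minimal illustration, not the program's code. It assumes a hypothetical `Pixel` type with RGB channels stored as doubles in [0, 1], and it assumes results above 1.0 are clamped.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical pixel type: RGB channels stored as doubles in [0, 1].
struct Pixel { double r, g, b; };

// Scale every channel by `factor`; results above 1.0 are clamped to 1.0.
void brighten(std::vector<Pixel>& img, double factor) {
    assert(factor >= 0.0);  // the report requires a non-negative factor
    for (Pixel& p : img) {
        p.r = std::min(1.0, p.r * factor);
        p.g = std::min(1.0, p.g * factor);
        p.b = std::min(1.0, p.b * factor);
    }
}
```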

Image::ChangeContrast

command line arguments: ./image -contrast [factor] < in.bmp > out.bmp

Contrast is controlled by interpolating each pixel between a constant gray image, whose value is the average luminance of the image, and the original image. A factor of 0.0 outputs that constant gray image, 1.0 gives the original image, and negative values invert the image. Values between 0.0 and 1.0 reduce contrast, and values greater than 1.0 increase it.
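The interpolation can be written as out = gray + factor * (in - gray), where gray is the average luminance. The sketch below is a minimal version of that idea. The `Pixel` type and the luminance weights (0.30, 0.59, 0.11) are assumptions, because the report does not say which weights were used.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical pixel type: RGB channels stored as doubles in [0, 1].
struct Pixel { double r, g, b; };

// Assumed luminance weights (a common approximation; not taken from the report).
double luminance(const Pixel& p) { return 0.30 * p.r + 0.59 * p.g + 0.11 * p.b; }

// out = gray + factor * (value - gray), clamped to [0, 1].
// factor 0 -> gray, factor 1 -> value, factor < 0 -> inverted about gray.
double interpolate(double gray, double value, double factor) {
    return std::min(1.0, std::max(0.0, gray + factor * (value - gray)));
}

void changeContrast(std::vector<Pixel>& img, double factor) {
    assert(!img.empty());
    double avg = 0.0;
    for (const Pixel& p : img) avg += luminance(p);
    avg /= static_cast<double>(img.size());  // average luminance of the whole image
    for (Pixel& p : img) {
        p.r = interpolate(avg, p.r, factor);
        p.g = interpolate(avg, p.g, factor);
        p.b = interpolate(avg, p.b, factor);
    }
}
```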

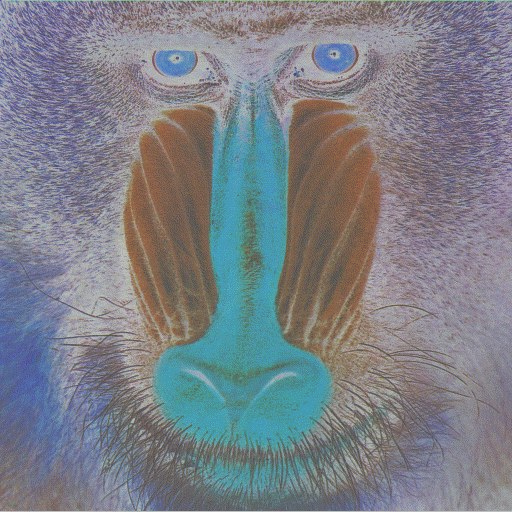

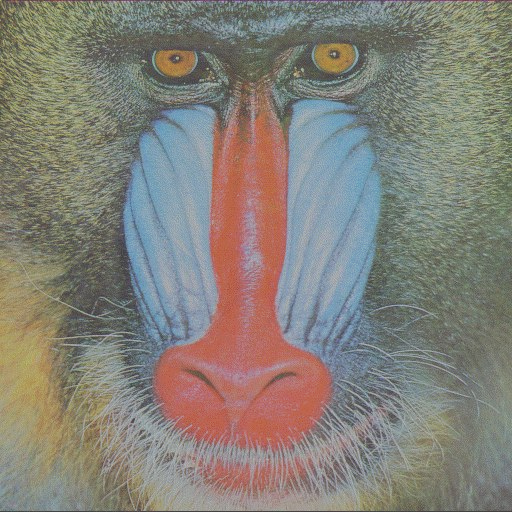

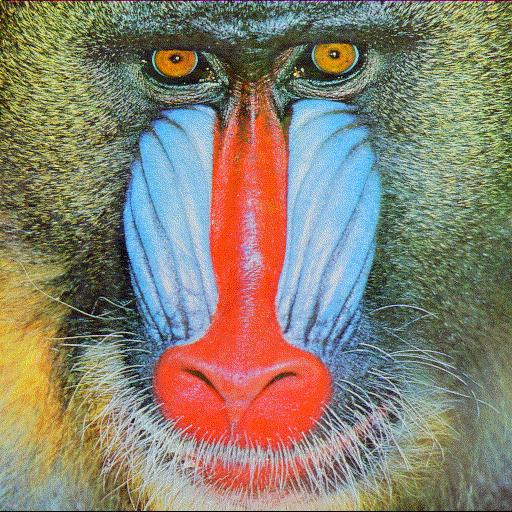

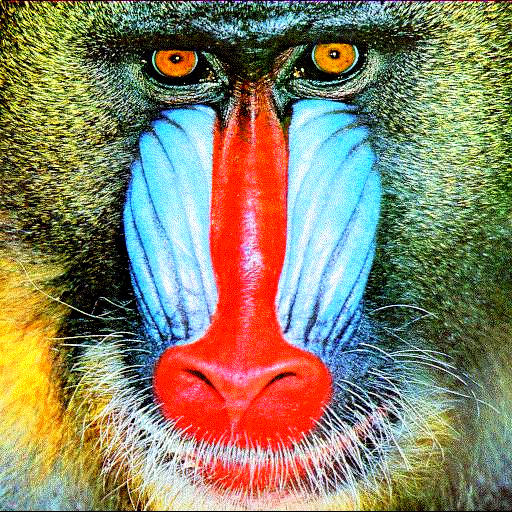

Image::ChangeSaturation

command line arguments: ./image -saturation [factor] < in.bmp > out.bmp

Saturation is controlled by interpolating each pixel between a grayscale version of the original image and the original image. The grayscale image is constructed from the pixel luminance. A factor of 0.0 outputs that grayscale image, 1.0 preserves the original image, and negative values invert the hue while keeping the luminance. Values between 0.0 and 1.0 make the image grayer, and values greater than 1.0 extrapolate away from gray to increase the saturation.
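Saturation uses the same interpolation as contrast. The difference is that the gray target is each pixel's own luminance rather than the image average. In this minimal sketch, the `Pixel` type and the luminance weights are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical pixel type: RGB channels stored as doubles in [0, 1].
struct Pixel { double r, g, b; };

// Assumed luminance weights (a common approximation; not taken from the report).
double luminance(const Pixel& p) { return 0.30 * p.r + 0.59 * p.g + 0.11 * p.b; }

// out = gray + factor * (value - gray), clamped to [0, 1].
double interpolate(double gray, double value, double factor) {
    return std::min(1.0, std::max(0.0, gray + factor * (value - gray)));
}

void changeSaturation(std::vector<Pixel>& img, double factor) {
    for (Pixel& p : img) {
        assert(p.r >= 0.0 && p.r <= 1.0 && p.g >= 0.0 && p.g <= 1.0 && p.b >= 0.0 && p.b <= 1.0);
        const double gray = luminance(p);  // per-pixel gray value
        p.r = interpolate(gray, p.r, factor);
        p.g = interpolate(gray, p.g, factor);
        p.b = interpolate(gray, p.b, factor);
    }
}
```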

Image::ChangeGamma

command line arguments: ./image -gamma [factor] < in.bmp > out.bmp

Gamma correction applies a nonlinear adjustment, controlled by the factor, to each color channel of every pixel. The factor must be a positive number. A factor of 1.0 preserves the original image, values greater than 1.0 brighten the image, and values less than 1.0 darken it.
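The report does not state the exact formula. One mapping consistent with the behavior described (1.0 preserves the image, larger factors brighten it) is out = in^(1/gamma) on channels normalized to [0, 1]. The sketch below assumes that mapping and a hypothetical `Pixel` type.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical pixel type: RGB channels stored as doubles in [0, 1].
struct Pixel { double r, g, b; };

// Assumed mapping: out = in^(1/gamma). For gamma > 1 the exponent is below 1,
// which raises mid-tones (brightens); 0 and 1 are fixed points.
void changeGamma(std::vector<Pixel>& img, double gamma) {
    assert(gamma > 0.0);  // the report requires a positive factor
    const double e = 1.0 / gamma;
    for (Pixel& p : img) {
        p.r = std::pow(p.r, e);
        p.g = std::pow(p.g, e);
        p.b = std::pow(p.b, e);
    }
}
```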

Image::Crop

command line arguments: ./image -crop [x] [y] [w] [h] < in.bmp > out.bmp

Cropping selects a rectangular section of the image. (x, y) is the top-left corner where cropping begins, and w and h are the width and height of the cropped output image. x must be in the range 0 to width - 1 (left to right), and y must be in the range 0 to height - 1 (top to bottom). x and y must therefore be non-negative, and w and h must be positive.
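The crop is a copy of a sub-rectangle. This sketch assumes a hypothetical single-channel `Image` struct stored row-major with the top row first. It also assumes that a rectangle running past the right or bottom edge is clipped; the report does not say how that case is handled.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical image type: one value per pixel, row-major, top row first.
struct Image {
    int width = 0, height = 0;
    std::vector<unsigned char> data;  // size width * height
    unsigned char at(int x, int y) const { return data[y * width + x]; }
};

// Copy the w x h rectangle whose top-left corner is (x, y).
// Assumption: parts of the rectangle outside the image are clipped away.
Image crop(const Image& in, int x, int y, int w, int h) {
    assert(x >= 0 && x < in.width && y >= 0 && y < in.height);
    assert(w > 0 && h > 0);
    Image out;
    out.width = std::min(w, in.width - x);
    out.height = std::min(h, in.height - y);
    out.data.reserve(static_cast<size_t>(out.width) * out.height);
    for (int row = 0; row < out.height; ++row)
        for (int col = 0; col < out.width; ++col)
            out.data.push_back(in.at(x + col, y + row));
    return out;
}
```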

Quantization and Dithering

For all of the quantization and dithering methods, the number of bits must be between 1 and 8.

Image::Quantize

command line arguments: ./image -quantize [bits] < in.bmp > out.bmp

For each color channel in the image, I first convert the value to a floating-point number p between 0 and 1, then compute q = floor(p * b), where b = 2^bits. I then convert back to the 0-255 range using color = floor(255 * q / (b - 1)).
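Here are the two formulas applied to a single 8-bit channel value. One detail is added beyond the description: q is clamped to b - 1. Without the clamp, p = 1.0 gives q = b and a color above 255. The function name is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Quantize one 8-bit channel value to `bits` bits and map it back to 0-255:
// q = floor(p * b), color = floor(255 * q / (b - 1)), with b = 2^bits.
int quantizeChannel(int value, int bits) {
    assert(bits >= 1 && bits <= 8);
    const int b = 1 << bits;
    const double p = value / 255.0;  // normalize to [0, 1]
    // Clamp so that p = 1.0 lands on the top level b - 1 instead of b.
    const int q = std::min(static_cast<int>(std::floor(p * b)), b - 1);
    return static_cast<int>(std::floor(255.0 * q / (b - 1)));
}
```

With 1 bit every channel becomes 0 or 255, and with 8 bits every value maps back to itself.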

Image::RandomDither

command line arguments: ./image -randomDither [bits] < in.bmp > out.bmp

I then added an option to add random noise in the quantizing function. The noise ranges from -0.5 to 0.5 and is added before flooring: q = floor(p * b + noise).
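This is a minimal sketch of the noisy quantizer. It uses `std::mt19937` and `std::uniform_real_distribution` from the C++ standard library, which may differ from the random source the program used. Clamping q into [0, b - 1] is an addition, because the noise can push p * b + noise below 0 or past the top level.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <random>

// Random dithering of one 8-bit channel: q = floor(p * b + noise), noise in [-0.5, 0.5).
int randomDitherChannel(int value, int bits, std::mt19937& rng) {
    assert(bits >= 1 && bits <= 8);
    std::uniform_real_distribution<double> noise(-0.5, 0.5);
    const int b = 1 << bits;
    const double p = value / 255.0;
    int q = static_cast<int>(std::floor(p * b + noise(rng)));
    q = std::max(0, std::min(q, b - 1));  // keep the level index in range
    return static_cast<int>(std::floor(255.0 * q / (b - 1)));
}
```

The generator is passed in so that it can be seeded once per image rather than once per pixel.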

Image::FloydSteinbergDither

command line arguments: ./image -FloydSteinbergDither [bits] < in.bmp > out.bmp

In this algorithm, the error made while quantizing each pixel is diffused to neighboring pixels that have not been processed yet, using the given weights:

right = 7/16, bottom-left = 3/16, bottom = 5/16, bottom-right = 1/16.
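This sketch shows error diffusion with those four weights on a single channel stored as normalized doubles. It assumes pixels are visited left to right, top to bottom. It reuses the floor-based quantizer from the plain quantization step, and it drops error that would spill past the image border. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Quantize a normalized value to one of 2^bits levels (floor rule, clamped) and
// return the level as a normalized value again.
double quantizeLevel(double p, int bits) {
    const int b = 1 << bits;
    p = std::min(1.0, std::max(0.0, p));  // diffused error can push p outside [0, 1]
    const int q = std::min(static_cast<int>(std::floor(p * b)), b - 1);
    return static_cast<double>(q) / (b - 1);
}

// Floyd-Steinberg dithering of one channel, row-major, values in [0, 1].
void floydSteinberg(std::vector<double>& img, int width, int height, int bits) {
    assert(bits >= 1 && bits <= 8);
    assert(static_cast<int>(img.size()) == width * height);
    // Add `amount` to pixel (x, y) if it lies inside the image; otherwise drop it.
    auto spread = [&](int x, int y, double amount) {
        if (x >= 0 && x < width && y < height) img[y * width + x] += amount;
    };
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            double& v = img[y * width + x];
            const double quantized = quantizeLevel(v, bits);
            const double err = v - quantized;  // error made at this pixel
            v = quantized;
            spread(x + 1, y,     err * 7.0 / 16.0);  // right
            spread(x - 1, y + 1, err * 3.0 / 16.0);  // bottom-left
            spread(x,     y + 1, err * 5.0 / 16.0);  // bottom
            spread(x + 1, y + 1, err * 1.0 / 16.0);  // bottom-right
        }
    }
}
```

For a color image, the same pass would run on each channel independently.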

Basic Convolution and Edge Detection

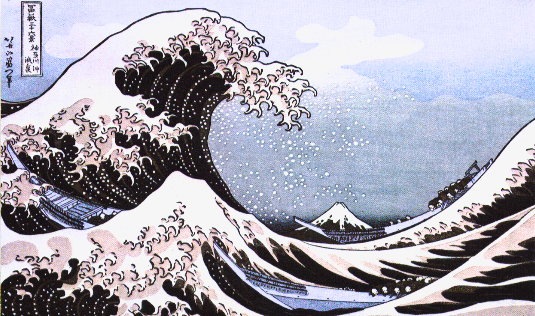

Dealing with edges

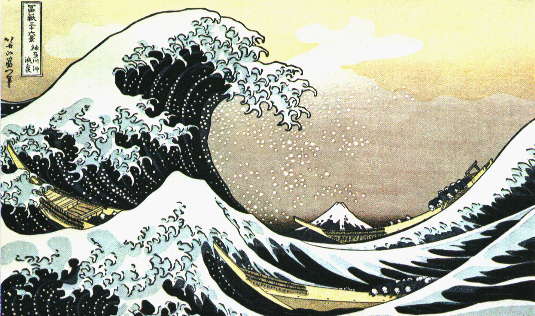

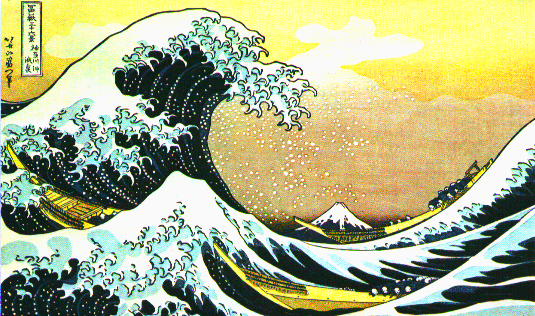

For all functions that need special handling at the image edges, I initially used image wrapping. I achieved this with a helper function that calculates a modified index when necessary.

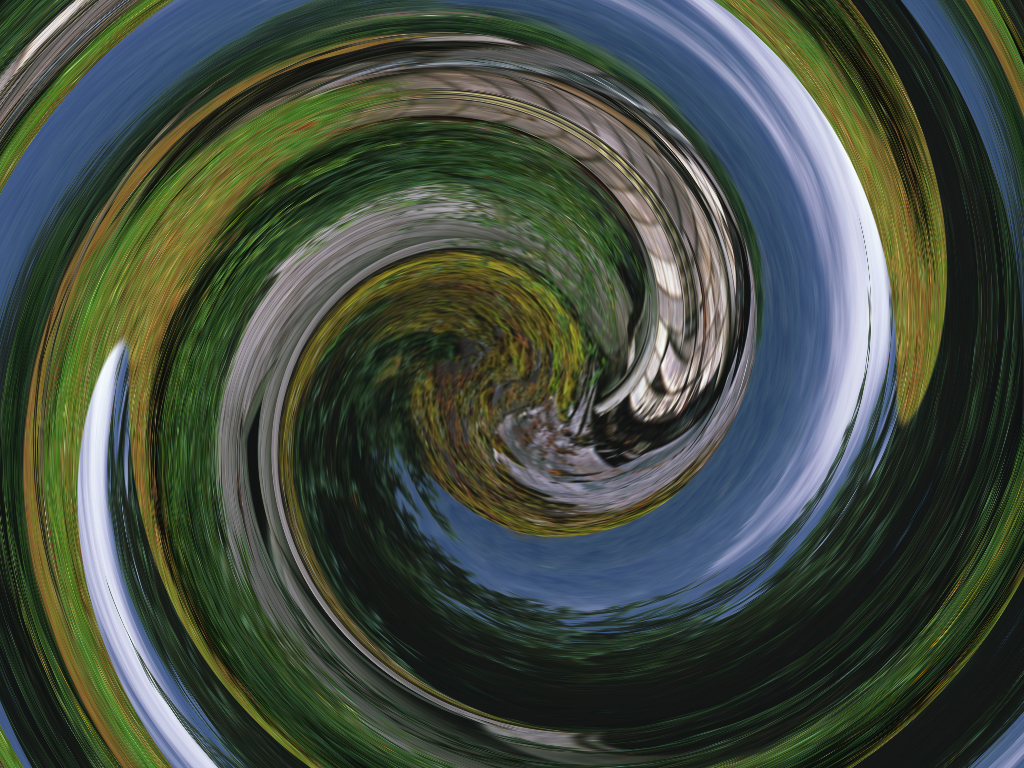

The helper function int wrapImg (int max, int idx) takes the size of the dimension (one more than the largest valid index) and the current index. It returns a modified index if the current index is less than 0 or greater than or equal to max. As the wave image shows, this may not be ideal. The top of the image is very light and the bottom is very dark, so wrapping mixes dark pixels from the bottom into the top edge and light pixels from the top into the bottom edge.
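This is one way to write a helper with the wrapImg signature. The body is a hypothetical sketch. It treats `max` as the dimension size and maps out-of-range indices to the opposite side, as if the image were tiled.

```cpp
#include <cassert>

// Wrap-around index: valid indices are 0 .. max-1; others wrap to the opposite side.
int wrapImg(int max, int idx) {
    assert(max > 0);
    const int r = idx % max;     // C++ % keeps the sign of idx, so r can be negative
    return r < 0 ? r + max : r;
}
```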

To deal with this, I decided to use the reflection method instead, with the helper function int reflectImg (int max, int idx), which reflects out-of-bounds indices back into the image.
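Reflection has two common variants: repeating the edge pixel, or mirroring about it. The report does not say which one reflectImg uses. This hypothetical sketch mirrors about the edge pixel, so -1 maps to 1 and max maps to max - 2. It also folds indices that lie far outside the image, which can happen with wide kernels.

```cpp
#include <cassert>

// Mirror-reflection index (assumed variant: the edge pixel is not duplicated).
int reflectImg(int max, int idx) {
    assert(max > 0);
    if (max == 1) return 0;                     // only one valid index
    const int period = 2 * (max - 1);           // the mirrored pattern repeats with this period
    const int r = ((idx % period) + period) % period;  // fold into [0, period)
    return r < max ? r : period - r;            // second half of a period runs backwards
}
```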

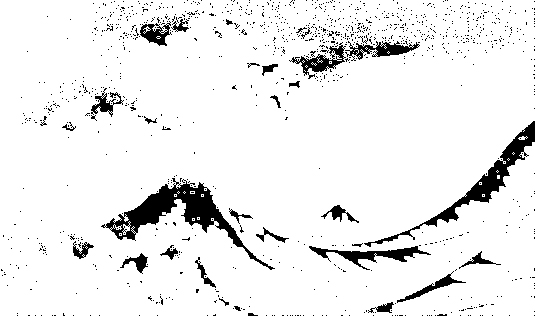

When I implemented edge detection, the helper function int reflectImg (int max, int idx) created some artifacts along the border that I did not like. To deal with this, I switched to a new helper function, int validImg (int max, int idx), which uses only pixels that lie inside the image.
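The report does not say how validImg signals an out-of-range index. This sketch assumes it returns -1 so that the caller can skip that kernel tap. The 1D convolution helper shows how a caller would use it. For a smoothing kernel, you would probably also divide by the sum of the weights actually used, so border pixels do not darken. That step is omitted here because edge-detection kernels sum to zero.

```cpp
#include <cassert>
#include <vector>

// Assumed behavior: return idx if it is a valid index, otherwise -1.
int validImg(int max, int idx) {
    return (idx >= 0 && idx < max) ? idx : -1;
}

// Hypothetical 1D convolution along a row that ignores taps falling outside it.
// `kernel` has odd length 2*radius+1; index i corresponds to offset i - radius.
std::vector<double> convolveRowValid(const std::vector<double>& row,
                                     const std::vector<double>& kernel) {
    assert(kernel.size() % 2 == 1);
    const int radius = static_cast<int>(kernel.size() / 2);
    const int n = static_cast<int>(row.size());
    std::vector<double> out(n, 0.0);
    for (int x = 0; x < n; ++x) {
        for (int k = -radius; k <= radius; ++k) {
            const int src = validImg(n, x - k);  // convolution: kernel offset k reads x - k
            if (src < 0) continue;               // no wrapped or reflected pixel is invented
            out[x] += kernel[k + radius] * row[src];
        }
    }
    return out;
}
```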

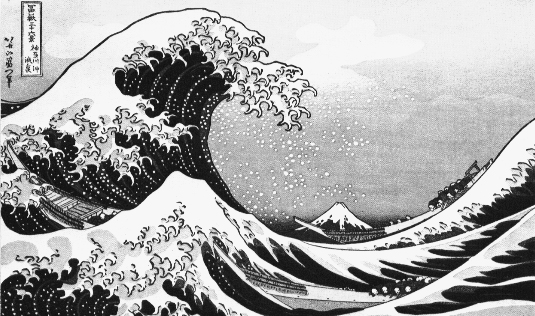

Image::Blur

command line arguments: ./image -blur [width] < in.bmp > out.bmp

The blur kernel width parameter n must be an odd integer. A Gaussian filter of width n is used for discrete convolution with the image. I set sigma = floor(n/2.0)/2.0, as given in the assignment.
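This sketch builds a normalized 1D Gaussian kernel with sigma = floor(n/2.0)/2.0. The function name is hypothetical. Note that n = 1 would give sigma = 0, so that case returns the single center tap directly.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Normalized 1D Gaussian kernel of odd width n, sigma = floor(n/2.0)/2.0.
std::vector<double> gaussianKernel(int n) {
    assert(n > 0 && n % 2 == 1);
    if (n == 1) return {1.0};              // sigma would be 0; only the center tap remains
    const double sigma = std::floor(n / 2.0) / 2.0;
    const int radius = n / 2;
    std::vector<double> k(n);
    double sum = 0.0;
    for (int i = -radius; i <= radius; ++i) {
        k[i + radius] = std::exp(-(i * i) / (2.0 * sigma * sigma));
        sum += k[i + radius];
    }
    for (double& w : k) w /= sum;          // weights sum to 1, so average brightness is kept
    return k;
}
```

A 2D Gaussian is separable. Blurring the rows and then the columns with this 1D kernel gives the same result as a full 2D convolution, at lower cost. The report does not say whether the program uses this shortcut.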

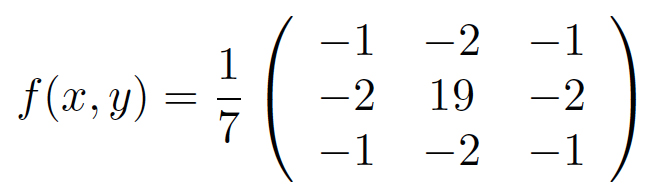

Image::Sharpen

command line arguments: ./image -sharpen < in.bmp > out.bmp

I used the filter provided in the assignment writeup.

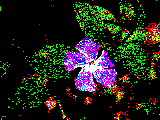

Image::EdgeDetect

command line arguments: ./image -edgeDetect [threshold] < in.bmp > out.bmp

Edge detection is done with Sobel filters, using the given threshold.
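This is a minimal Sobel sketch on a grayscale image with values 0-255. It rests on several assumptions:

- A pixel is marked 255 when the gradient magnitude exceeds the threshold, and 0 otherwise.
- Border pixels are simply left at 0 instead of going through a border helper.
- The function name is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Sobel edge detection: row-major grayscale input, binary 0/255 output.
std::vector<int> sobelEdges(const std::vector<int>& img, int width, int height,
                            double threshold) {
    assert(static_cast<int>(img.size()) == width * height);
    static const int gx[3][3] = {{-1, 0, 1}, {-2, 0, 2}, {-1, 0, 1}};    // horizontal gradient
    static const int gy[3][3] = {{-1, -2, -1}, {0, 0, 0}, {1, 2, 1}};    // vertical gradient
    std::vector<int> out(img.size(), 0);
    for (int y = 1; y < height - 1; ++y) {
        for (int x = 1; x < width - 1; ++x) {
            int sx = 0, sy = 0;
            for (int j = -1; j <= 1; ++j)
                for (int i = -1; i <= 1; ++i) {
                    const int v = img[(y + j) * width + (x + i)];
                    sx += gx[j + 1][i + 1] * v;
                    sy += gy[j + 1][i + 1] * v;
                }
            const double mag = std::sqrt(static_cast<double>(sx) * sx +
                                         static_cast<double>(sy) * sy);
            out[y * width + x] = mag > threshold ? 255 : 0;
        }
    }
    return out;
}
```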

Antialiased Scale and Shift

Image::Scale

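command line arguments: ./image -sampling [type] -size [w] [h] < in.bmp > out.bmp

Nearest Neighbor: each output pixel takes the value of the closest input pixel.

Hat Filter: f(x) = 1 - |x| for |x| < 1, and 0 otherwise.

Mitchell Filter (the Mitchell-Netravali cubic with B = C = 1/3, zero for |x| >= 2):

f(0 <= |x| < 1) = (1/6)*(7|x|^3 - 12|x|^2 + 16/3)

f(1 <= |x| < 2) = (1/6)*((-7/3)|x|^3 + 12|x|^2 - 20|x| + 32/3)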
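These are the three filters, plus a hypothetical 1D sampler that evaluates a row at a fractional position u. Scale and Shift both reduce to this kind of operation: each output pixel maps to a source position that generally falls between pixels. Out-of-range source indices are clamped to the edge here for simplicity; that is an assumption and may not match the program's edge handling. Weights are renormalized so that a constant row stays constant.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hat (tent) filter, support |x| < 1.
double hatFilter(double x) {
    x = std::fabs(x);
    return x < 1.0 ? 1.0 - x : 0.0;
}

// Mitchell-Netravali filter with B = C = 1/3, support |x| < 2.
double mitchellFilter(double x) {
    x = std::fabs(x);
    if (x < 1.0) return (7.0 * x * x * x - 12.0 * x * x + 16.0 / 3.0) / 6.0;
    if (x < 2.0) return ((-7.0 / 3.0) * x * x * x + 12.0 * x * x - 20.0 * x + 32.0 / 3.0) / 6.0;
    return 0.0;
}

// Clamp an index into [0, n - 1] (assumed edge handling for this sketch).
int clampIndex(int j, int n) { return j < 0 ? 0 : (j >= n ? n - 1 : j); }

// Filtered sample of `row` at position u, using filter f with the given radius.
double sampleRow(const std::vector<double>& row, double u, double (*f)(double), int radius) {
    const int n = static_cast<int>(row.size());
    assert(n > 0);
    const int base = static_cast<int>(std::floor(u));
    double sum = 0.0, weightSum = 0.0;
    for (int j = base - radius + 1; j <= base + radius; ++j) {
        const double w = f(u - j);
        sum += w * row[clampIndex(j, n)];
        weightSum += w;
    }
    return weightSum != 0.0 ? sum / weightSum : 0.0;
}

// Nearest neighbor: take the closest source pixel.
double sampleNearest(const std::vector<double>& row, double u) {
    const int n = static_cast<int>(row.size());
    assert(n > 0);
    return row[clampIndex(static_cast<int>(std::lround(u)), n)];
}
```

When shrinking an image, full antialiasing also needs the filter support widened by the inverse of the scale factor. Without that, the hat and Mitchell filters sample too few source pixels.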

Image::Shift

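command line arguments: ./image -shift [sx] [sy] < in.bmp > out.bmp

Shifting uses the same sampling filters as Image::Scale: nearest neighbor (each output pixel takes the value of the closest input pixel), the hat filter f(x) = 1 - |x| for |x| < 1, and the Mitchell filter:

f(0 <= |x| < 1) = (1/6)*(7|x|^3 - 12|x|^2 + 16/3)

f(1 <= |x| < 2) = (1/6)*((-7/3)|x|^3 + 12|x|^2 - 20|x| + 32/3)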

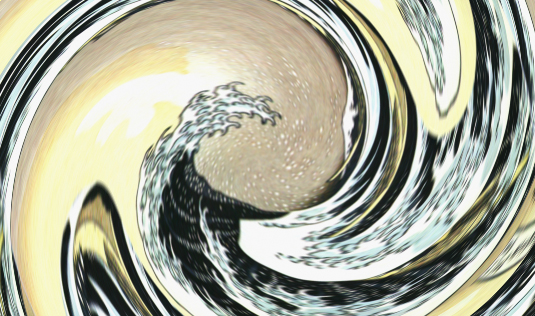

Fun nonlinear filters

command line arguments: ./image -fun < in.bmp > out.bmp

I decided to implement a swirl effect, using the Mitchell filter for antialiasing. I searched online for swirl equations and found several variants. All of them left black pixels where the transformed position fell outside the image. Instead, I sample those positions with my sampling method, which wraps around the image edges. The transformation I chose is newX = x*cos(angle) - y*sin(angle) + xcenter and newY = x*sin(angle) + y*cos(angle) + ycenter. Here x and y are measured relative to the center (xcenter, ycenter), r = sqrt(x*x + y*y), and angle is a function of r.
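This sketch shows the coordinate mapping for a single output pixel. The report only says that the angle depends on r. The profile used here, strength * exp(-r / radius), is a hypothetical choice that rotates most near the center and fades outward. The names `swirlSource`, `strength`, and `radius` are also assumptions. The returned position would then be passed to a filtered sampler such as the Mitchell filter.

```cpp
#include <cassert>
#include <cmath>

struct Point { double x, y; };

// Hypothetical angle profile: strongest rotation at the center, decaying with r.
double swirlAngle(double r, double strength, double radius) {
    return strength * std::exp(-r / radius);
}

// Map output pixel (px, py) to the source position to sample, rotating about
// (xcenter, ycenter) by an angle that depends on the distance r from the center.
Point swirlSource(double px, double py, double xcenter, double ycenter,
                  double strength, double radius) {
    assert(radius > 0.0);
    const double x = px - xcenter;  // coordinates relative to the center
    const double y = py - ycenter;
    const double r = std::sqrt(x * x + y * y);
    const double angle = swirlAngle(r, strength, radius);
    return {x * std::cos(angle) - y * std::sin(angle) + xcenter,
            x * std::sin(angle) + y * std::cos(angle) + ycenter};
}
```

Because the rotation preserves r, each pixel only moves along its circle around the center. This is what produces the swirl instead of a general distortion.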